Overview: This book aims to provide an accessible introduction to AI for those with little to no prior knowledge of the subject. It covers the basics of AI, including its history, key concepts, and current applications. It also addresses common misconceptions about AI and explores some of the ethical and social implications of the technology.

Chapter 1: Introduction to AI

Artificial intelligence, or AI, is a rapidly growing field that has the potential to revolutionize the way we live and work. At its core, AI is about creating machines that can perform tasks that would normally require human intelligence, such as recognizing speech, making decisions, and learning from experience. In this chapter, we'll explore the basics of AI and why it's becoming increasingly important in today's world.

What is AI?

AI is a broad field that encompasses a wide range of technologies, from simple rule-based systems to advanced machine learning algorithms. The tasks these systems perform range from simple, repetitive work like sorting and filtering data to complex activities like recognizing speech or driving a car.

Many modern AI systems are able to learn and improve over time. This capability is known as machine learning, and it involves training algorithms on large amounts of data so that they can recognize patterns and make predictions based on that data. Machine learning is used in a wide range of applications, from image and speech recognition to fraud detection and recommendation systems.

Why is AI Important?

AI is becoming increasingly important in today's world for a variety of reasons. One of the biggest reasons is its potential to automate many tasks that are currently performed by humans. This could lead to significant increases in productivity and efficiency, as well as cost savings for businesses.

AI also has the potential to improve decision-making in a wide range of fields. For example, in healthcare, AI could be used to analyze large amounts of patient data to identify patterns and risk factors for various diseases. In finance, AI could be used to analyze market trends and make more accurate predictions about future performance.

Finally, AI has the potential to create entirely new industries and job opportunities. As machines become more intelligent and capable, they will be able to perform tasks that were previously impossible or too difficult for humans. This could lead to new opportunities in fields like robotics, autonomous vehicles, and more.

The image above shows an illustration of an artificial intelligence robot. The robot is made up of various computer parts and has a glowing blue brain inside its head. This image is meant to convey the idea that AI is a complex and powerful technology that has the potential to change the world we live in.

Chapter 2: A Brief History of AI

Artificial intelligence has a long and fascinating history, with roots that can be traced back centuries. In this chapter, we'll take a look at some of the key milestones in the development of AI, from its earliest beginnings to the present day.

Early History of AI:

The history of AI can be traced back to the ancient Greeks, who created myths and stories about mechanical beings with human-like intelligence. In the centuries that followed, inventors and scientists continued to explore the idea of creating machines that could think and reason like humans.

One of the earliest examples of an "intelligent" machine was the Mechanical Turk, a chess-playing automaton that was created in the late 18th century. Although the Mechanical Turk was actually controlled by a hidden human operator, it sparked a fascination with machines that could perform complex tasks.

The Birth of Modern AI:

The birth of modern AI can be traced back to the 1940s and 1950s, when researchers began to develop the first electronic computers. These early computers provided a platform for researchers to explore the idea of creating machines that could learn and reason like humans.

One of the earliest pioneers of AI was John McCarthy, who coined the term "artificial intelligence" in a 1955 proposal for a summer workshop at Dartmouth College. The workshop, held in 1956, brought together a small group of researchers to discuss the potential of AI, and it is widely regarded as the founding event of the field.

Over the next few decades, researchers made significant progress in the field of AI. They developed algorithms for tasks like language translation, image recognition, and game-playing, and created machines that could perform these tasks with increasing accuracy.

Recent Advances in AI:

In recent years, advances in machine learning and other AI technologies have led to a new wave of excitement and interest in the field. Today, AI is being used in a wide range of applications, from virtual assistants like Siri and Alexa to self-driving cars and medical diagnosis tools.

The image above shows a statue of Alan Turing, one of the pioneers of computer science and artificial intelligence. Turing was instrumental in cracking the German Enigma code during World War II, and he also developed the concept of the "Turing Test" to determine whether a machine can exhibit intelligent behavior that is indistinguishable from that of a human. This statue is located at Bletchley Park in the UK, where Turing worked during the war.

Chapter 3: Types of AI

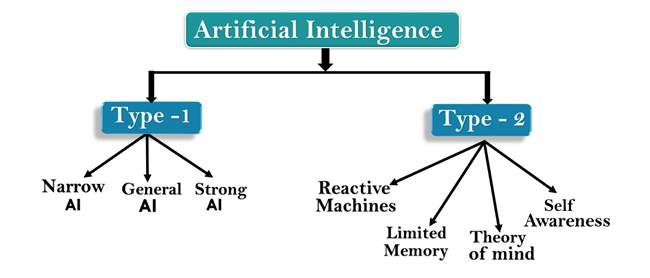

Artificial intelligence can be divided into several different categories based on the way it is designed to function. In this chapter, we'll take a look at some of the most common types of AI and how they are used in different applications.

Reactive Machines:

Reactive machines are the simplest form of AI and are designed to react to specific stimuli in a specific way. They do not have the ability to form memories or use past experiences to inform future actions. One example of a reactive machine is a chess-playing computer that can analyze the current state of the board and make the best move based on that information.

Limited Memory:

Limited memory AI systems are designed to use past experiences to inform future decisions. These systems are used in a wide range of applications, from recommendation engines that suggest products based on past purchases to fraud detection systems that analyze past behavior to identify potential risks.
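To make the contrast with reactive machines concrete, here is a minimal Python sketch. The thermostat function and the `PurchaseRecommender` class are hypothetical illustrations, not real products or library APIs. The first reacts only to the current reading, while the second keeps a record of past purchases and consults it when deciding.

```python
from collections import Counter
from typing import Optional


def reactive_thermostat(temperature_c: float) -> str:
    """Reactive: the action depends only on the current reading."""
    if temperature_c < 18.0:
        return "heat"
    if temperature_c > 24.0:
        return "cool"
    return "idle"


class PurchaseRecommender:
    """Limited memory: stores past purchases and consults them later."""

    def __init__(self) -> None:
        self.history: Counter = Counter()

    def record(self, category: str) -> None:
        self.history[category] += 1

    def recommend(self) -> Optional[str]:
        # Suggest the most frequently purchased category, if any exist.
        if not self.history:
            return None
        return self.history.most_common(1)[0][0]


recommender = PurchaseRecommender()
for purchase in ["books", "music", "books"]:
    recommender.record(purchase)

print(reactive_thermostat(15.0))   # heat
print(recommender.recommend())     # books
```

Calling `reactive_thermostat` twice with the same temperature always gives the same answer, whereas the recommender's answer changes as its history grows.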

Theory of Mind:

Theory of mind AI systems would be able to understand the intentions, beliefs, and emotions of other agents. This type of AI remains largely a research goal rather than a deployed technology. It is being pursued in areas like natural language processing and social robotics, where it is important to understand the intentions and emotions of human users.

Self-Aware:

Self-aware AI systems would possess consciousness and a sense of self. This type of AI is still largely in the realm of science fiction, but researchers are exploring the possibility of creating machines that can think and reason like humans.

The image above shows a diagram that illustrates the different types of AI. The diagram shows the progression from reactive machines, which have no memory or past experience, to self-aware machines, which have a sense of self-awareness and consciousness. This image is meant to provide a visual representation of the different types of AI and how they are used in different applications.

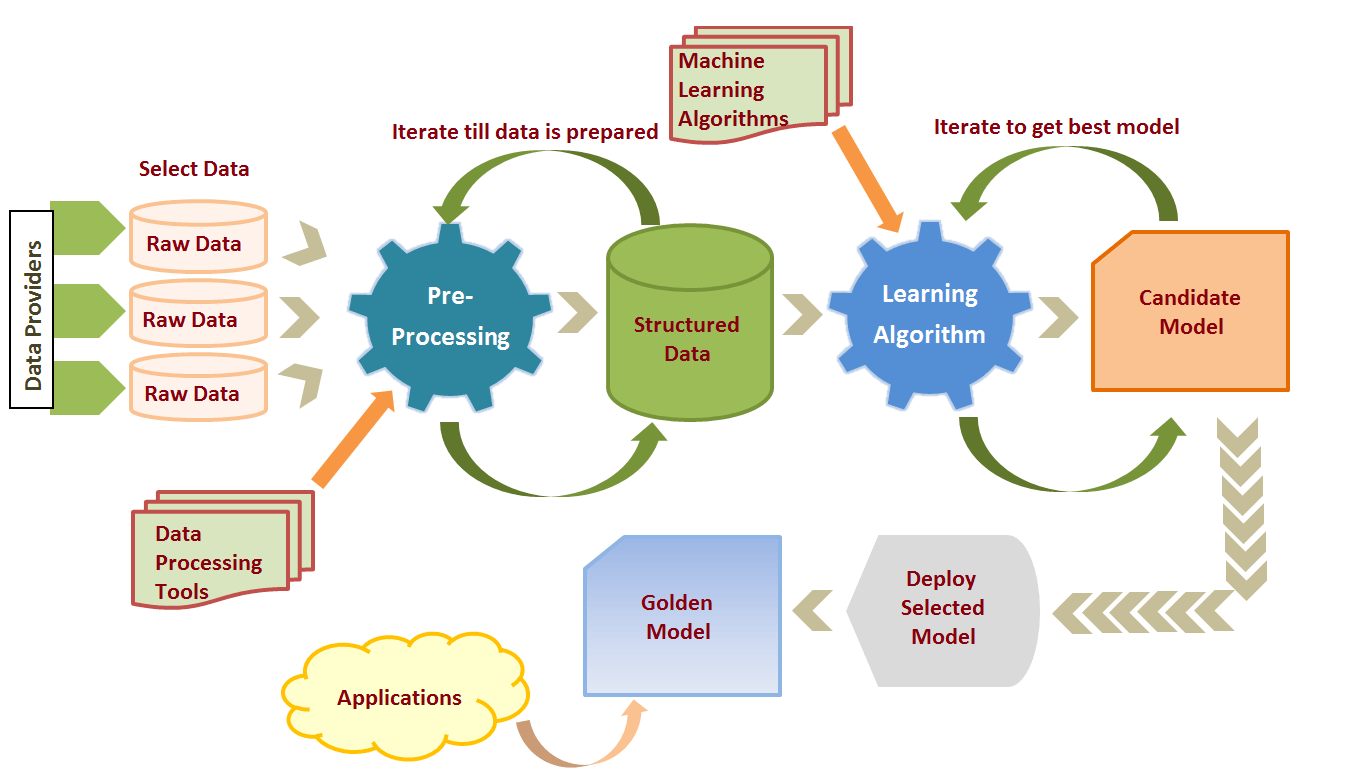

Chapter 4: Machine Learning

Machine learning is a subset of AI that involves training machines to learn from data, without being explicitly programmed. In this chapter, we'll take a closer look at what machine learning is, how it works, and some of the most common types of machine learning algorithms.

What is Machine Learning?

Machine learning is a type of AI that involves teaching machines to recognize patterns and make decisions based on data. In traditional programming, developers write code that specifies how a program should behave in different situations. In machine learning, however, developers train a model on a set of data and let the machine learn how to make decisions based on that data.
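The difference between the two approaches can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the spam scenario, the example data, and the function names are all made up for this sketch. In the first function a developer picks the cutoff by hand; in the second, the cutoff is chosen from labeled examples.

```python
def rule_based_is_spam(num_links: int) -> bool:
    # Traditional programming: a developer chooses the cutoff by hand.
    return num_links > 3


def learn_cutoff(examples):
    """Machine learning in miniature: pick the cutoff with the fewest errors."""
    best_cutoff, best_errors = None, len(examples) + 1
    for cutoff in sorted({links for links, _ in examples}):
        errors = sum((links > cutoff) != is_spam for links, is_spam in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Hypothetical labeled data: (number of links in a message, is it spam?)
examples = [(0, False), (1, False), (2, False), (5, True), (7, True), (9, True)]
cutoff = learn_cutoff(examples)


def learned_is_spam(num_links: int) -> bool:
    return num_links > cutoff


print(cutoff)               # 2
print(learned_is_spam(6))   # True
```

If the data changes, the learned cutoff changes with it, while the hand-written rule stays the same until someone edits the code.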

How Does Machine Learning Work?

There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

Supervised Learning:

Supervised learning involves training a model on a set of labeled data, where the desired output is already known. For example, a model might be trained to recognize images of cats and dogs, where each image is labeled as either "cat" or "dog." Once the model has been trained, it can be used to predict the labels of new, unlabeled data.
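As a rough sketch of this idea, the snippet below uses a nearest-neighbor classifier, one of the simplest supervised methods (chosen here for illustration only). The features (weight in kilograms, height in centimeters) and the data points are invented; real image classifiers work on pixel data and are far more complex.

```python
import math


def nearest_neighbor_predict(training_data, features):
    """Return the label of the training example closest to `features`."""
    closest = min(training_data, key=lambda example: math.dist(example[0], features))
    return closest[1]


# Hypothetical labeled data: ((weight_kg, height_cm), label)
training_data = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((20.0, 55.0), "dog"),
    ((30.0, 60.0), "dog"),
]

# Predict labels for new, unlabeled animals.
print(nearest_neighbor_predict(training_data, (4.5, 24.0)))    # cat
print(nearest_neighbor_predict(training_data, (25.0, 58.0)))   # dog
```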

Unsupervised Learning:

Unsupervised learning involves training a model on a set of unlabeled data, where the desired output is not known. The model is tasked with finding patterns and relationships in the data without any guidance. This type of learning is often used in clustering and anomaly detection.
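One way to picture clustering is with k-means, a common clustering algorithm (picked here as an example; it is only one of several options). The sketch below is a simplified one-dimensional version with made-up numbers and hand-picked starting centers. Note that no labels are supplied: the algorithm groups the points on its own.

```python
def k_means_1d(points, centers, iterations=10):
    """Alternate between assigning points to the nearest center and
    moving each center to the mean of its assigned points."""
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for point in points:
            nearest = min(range(len(centers)), key=lambda i: abs(point - centers[i]))
            clusters[nearest].append(point)
        # Keep the old center if a cluster ends up empty.
        centers = [
            sum(cluster) / len(cluster) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers, clusters


points = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]   # unlabeled data
centers, clusters = k_means_1d(points, centers=[0.0, 5.0])

print(centers)    # [2.0, 11.0]
print(clusters)   # [[1.0, 2.0, 3.0], [10.0, 11.0, 12.0]]
```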

Reinforcement Learning:

Reinforcement learning involves training a model to make decisions in a dynamic environment. The model receives feedback in the form of rewards or penalties for each decision it makes, and it adjusts its behavior based on that feedback. This type of learning is often used in robotics and game-playing.

The image above shows a machine learning workflow, which illustrates the process of training a machine learning model. The data is first prepared and preprocessed, then it is split into training and testing sets. The model is trained on the training set, and its performance is evaluated on the testing set. Once the model is deemed to be accurate, it can be used to make predictions on new data. This image is meant to provide a visual representation of how machine learning works and the different steps involved in the process.
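The workflow described here (prepare data, split, train, evaluate) can be sketched in plain Python. Everything in this snippet is an assumption made for illustration: the synthetic dataset, the 80/20 split, and the deliberately tiny "model", which is just the midpoint between the two class averages.

```python
import random


def train_test_split(data, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and split it into training and testing sets."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def train(training_set):
    """'Train' a one-number model: the midpoint between the two class means."""
    zeros = [x for x, label in training_set if label == 0]
    ones = [x for x, label in training_set if label == 1]
    return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2


def accuracy(threshold, dataset):
    """Fraction of examples where 'x above threshold' matches the label."""
    correct = sum((x > threshold) == (label == 1) for x, label in dataset)
    return correct / len(dataset)


# Synthetic, well-separated data: class 0 is 0..9, class 1 is 20..29.
data = [(x, 0) for x in range(10)] + [(x, 1) for x in range(20, 30)]

train_set, test_set = train_test_split(data)
model = train(train_set)
test_accuracy = accuracy(model, test_set)

print(len(train_set), len(test_set))   # 16 4
print(test_accuracy)                   # 1.0
```

The perfect score is a consequence of the wide gap between the two synthetic classes. On real data, the accuracy on the testing set is usually lower, which is exactly why it is measured on examples the model did not see during training.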

Chapter 5: Deep Learning

Deep learning is a subset of machine learning that involves training artificial neural networks to recognize patterns in data. In this chapter, we'll take a closer look at what deep learning is, how it works, and some of the most common applications of deep learning.

What is Deep Learning?

Deep learning is a type of machine learning that involves training artificial neural networks with multiple layers. These networks are designed to recognize patterns in data, using the connections between nodes to identify and extract features from complex datasets.

How Does Deep Learning Work?

Deep learning involves training a neural network on a large dataset, using backpropagation to adjust the weights and biases of the network until it accurately recognizes patterns in the data. The network is then tested on a separate set of data to evaluate its performance.
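To give a feel for what backpropagation does, here is a minimal sketch of a network with one input, one hidden unit, and one output. The network shape, the `tanh` activation, the starting values, and the single training example are all assumptions chosen to keep the arithmetic small. Real networks have many units per layer and use libraries that compute these gradients automatically.

```python
import math


def forward(params, x):
    w1, b1, w2, b2 = params
    hidden = math.tanh(w1 * x + b1)   # hidden-layer activation
    return hidden, w2 * hidden + b2   # (hidden value, prediction)


def loss_and_gradients(params, x, y):
    w1, b1, w2, b2 = params
    hidden, prediction = forward(params, x)
    error = prediction - y
    loss = error ** 2

    # Backward pass: apply the chain rule from the output back to the input.
    d_prediction = 2 * error
    d_w2 = d_prediction * hidden
    d_b2 = d_prediction
    d_hidden = d_prediction * w2
    d_pre_activation = d_hidden * (1 - hidden ** 2)   # derivative of tanh
    d_w1 = d_pre_activation * x
    d_b1 = d_pre_activation
    return loss, [d_w1, d_b1, d_w2, d_b2]


params = [0.5, 0.0, 0.5, 0.0]   # arbitrary starting weights and biases
x, y = 1.0, 0.5                 # a single made-up training example
learning_rate = 0.1

initial_loss, _ = loss_and_gradients(params, x, y)
for _ in range(200):
    _, grads = loss_and_gradients(params, x, y)
    # Gradient descent: nudge each parameter against its gradient.
    params = [p - learning_rate * g for p, g in zip(params, grads)]
final_loss, _ = loss_and_gradients(params, x, y)

print(final_loss < initial_loss)   # True
```

Each pass computes how much every weight and bias contributed to the error, then adjusts them slightly in the direction that reduces it.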

There are several types of neural networks used in deep learning, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and deep belief networks (DBNs).

Applications of Deep Learning:

Deep learning has a wide range of applications in fields such as computer vision, natural language processing, and speech recognition. Some of the most common applications of deep learning include:

Image and Video Recognition:

Deep learning is used to recognize and classify images and videos, allowing machines to identify and track objects in real-time. This has applications in fields such as self-driving cars and security systems.

Natural Language Processing:

Deep learning is used to process and analyze human language, allowing machines to understand and generate text. This has applications in fields such as virtual assistants and chatbots.

Speech Recognition:

Deep learning is used to recognize and transcribe speech, allowing machines to understand and respond to spoken commands. This has applications in fields such as voice assistants and call center automation.

The image above shows the architecture of a convolutional neural network (CNN), which is commonly used in image and video recognition. The network consists of several layers, including convolutional layers, pooling layers, and fully connected layers. The image is meant to provide a visual representation of how a CNN works and the different layers involved in the process.
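The two layer types that give a CNN its name can be sketched without any libraries. The snippet below is a simplified illustration: the 5x5 "image", the 2x2 edge-detecting kernel, and the function names are invented for this example, and a real CNN learns its kernel values during training rather than having them written by hand.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


def max_pool(feature_map, size=2):
    """Keep only the largest value in each non-overlapping size x size block."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


# A tiny image that is dark (0) on the left and bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

feature_map = conv2d(image, kernel)
pooled = max_pool(feature_map)

print(feature_map[0])   # [0, 2, 0, 0]
print(pooled)           # [[2, 0], [2, 0]]
```

The convolution output is largest where the edge sits, and pooling shrinks the result while keeping that strong response.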

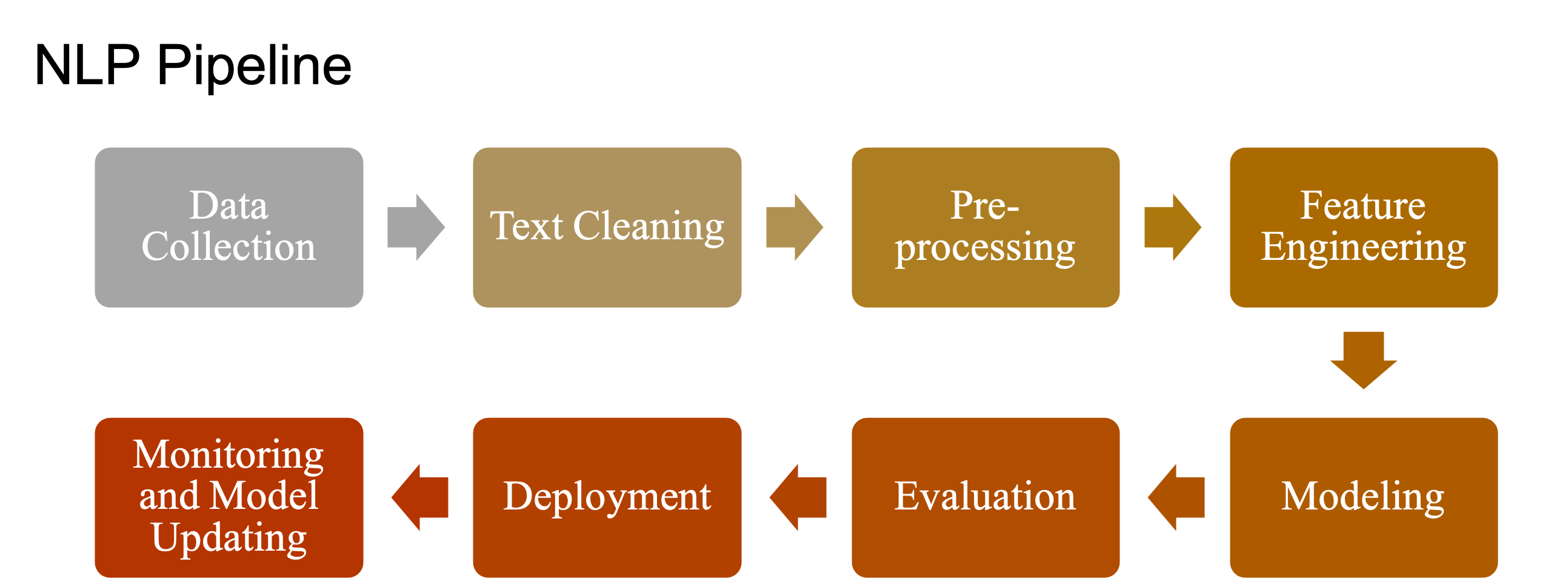

Chapter 6: Natural Language Processing

Natural Language Processing (NLP) is a field of AI that focuses on enabling machines to understand and interpret human language. In this chapter, we'll take a closer look at what NLP is, how it works, and some of the most common applications of NLP.

What is Natural Language Processing?

In practice, NLP involves analyzing and processing large amounts of textual data, identifying patterns and relationships between words and phrases, and using this information to generate responses and make decisions.

How Does Natural Language Processing Work?

NLP involves several key processes, including:

Tokenization: Breaking down a text into individual words, phrases, or sentences.

Part-of-speech (POS) tagging: Identifying the grammatical category of each word in a sentence, such as whether it is a noun, verb, or adjective.

Named entity recognition (NER): Identifying named entities in text, such as people, organizations, and locations.

Sentiment analysis: Analyzing the tone and emotion behind a text, such as whether it is positive, negative, or neutral.
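Two of the processes above, tokenization and sentiment analysis, are simple enough to sketch in plain Python. This is a toy version under stated assumptions: the word lists are invented and tiny, and the scoring rule just counts words. Production systems typically rely on trained models, and POS tagging and NER are omitted here because they usually require a dedicated NLP library.

```python
import re

# Hypothetical mini-lexicons; real sentiment lexicons are far larger.
POSITIVE_WORDS = {"good", "great", "love", "excellent"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "poor"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Label text by counting positive and negative words."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE_WORDS for t in tokens) - sum(
        t in NEGATIVE_WORDS for t in tokens
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(tokenize("The service was great!"))   # ['the', 'service', 'was', 'great']
print(sentiment("The service was great!"))  # positive
print(sentiment("I hate the poor battery life."))  # negative
```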

Applications of Natural Language Processing:

NLP has a wide range of applications in fields such as customer service, marketing, and healthcare. Some of the most common applications of NLP include:

Chatbots and Virtual Assistants:

NLP is used to power chatbots and virtual assistants, allowing them to understand and respond to natural language queries from users.

Sentiment Analysis:

NLP is used to analyze customer feedback and social media posts to understand sentiment and identify areas for improvement.

Text Summarization and Translation:

NLP is used to summarize and translate large volumes of text, such as news articles or legal documents.

The image above shows a natural language processing pipeline, which illustrates the different stages of processing involved in analyzing and interpreting text. The pipeline consists of several stages, including tokenization, POS tagging, NER, and sentiment analysis. The image is meant to provide a visual representation of how NLP works and the different stages involved in the process.

Chapter 7: Reinforcement Learning

Reinforcement learning is a type of machine learning that involves training an AI agent to make decisions in an environment based on trial and error. In this chapter, we'll take a closer look at what reinforcement learning is, how it works, and some of the most common applications of reinforcement learning.

What is Reinforcement Learning?

Rather than learning from labeled examples, a reinforcement learning agent learns by acting. The agent receives feedback in the form of rewards or punishments for each action it takes, and uses this feedback to adjust its decision-making process over time.

How Does Reinforcement Learning Work?

Reinforcement learning involves several key components, including:

The Environment: The world in which the AI agent operates, such as a game or simulation.

The Agent: The AI agent that interacts with the environment and makes decisions based on trial and error.

Rewards: The feedback that the agent receives from the environment, in the form of rewards or punishments.

Policies: The decision-making process used by the agent to choose actions based on the current state of the environment.
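The four components above can be seen working together in a small sketch. It uses Q-learning, one well-known reinforcement learning algorithm (chosen for illustration; the text does not single out a specific method). The five-position corridor environment, the reward of 1.0 at the goal, and the parameter values are all assumptions made for this example.

```python
import random

N_STATES = 5          # positions 0..4; position 4 is the goal
ACTIONS = [-1, +1]    # move left or move right


def step(state, action):
    """The environment: apply an action, return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """The agent: learn a table of action values by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Policy during training: mostly greedy, sometimes random.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Final policy: the best-valued action in each non-goal position.
policy = [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q_table[:-1]]

print(policy)   # [1, 1, 1, 1]
```

After training, the learned policy is to move right from every position, which is the shortest route to the reward.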

Applications of Reinforcement Learning:

Reinforcement learning has a wide range of applications in fields such as robotics, gaming, and finance. Some of the most common applications of reinforcement learning include:

Game Playing:

Reinforcement learning is used to train AI agents to play games such as chess and Go, allowing them to develop complex strategies and compete with human players.

Robotics:

Reinforcement learning is used to train robots to perform complex tasks, such as navigating through an environment or manipulating objects.

Finance:

Reinforcement learning is used to develop trading algorithms that can make decisions based on market conditions and other factors.

The image above shows the process of reinforcement learning, which involves the interaction between the agent, environment, rewards, and policies. The image is meant to provide a visual representation of how reinforcement learning works and the different components involved in the process.